1. Introducing OpenAI’s Assistants API

1.1 Definition and Purpose of Assistants API

The Assistants API lets developers build AI assistants into their own applications. You give an assistant custom instructions and select a model, and the assistant then uses models, tools, and knowledge to respond to user queries. Currently, the Assistants API supports three types of tools: Code Interpreter, Retrieval, and Function Calling.

1.2 Applications of the Assistants API

The Assistants API is suitable for various scenarios that require interactive AI support. For example:

- Customer Support: Automatically answer common questions to reduce the workload of human customer service.

- Online Education: Answer students’ questions and provide customized learning support.

- Data Analysis: Analyze data files uploaded by users, generate reports, and visualize charts.

- Personalized Recommendations: Provide personalized suggestions and services based on users’ historical interactions.

1.3 Core Concepts of Assistants

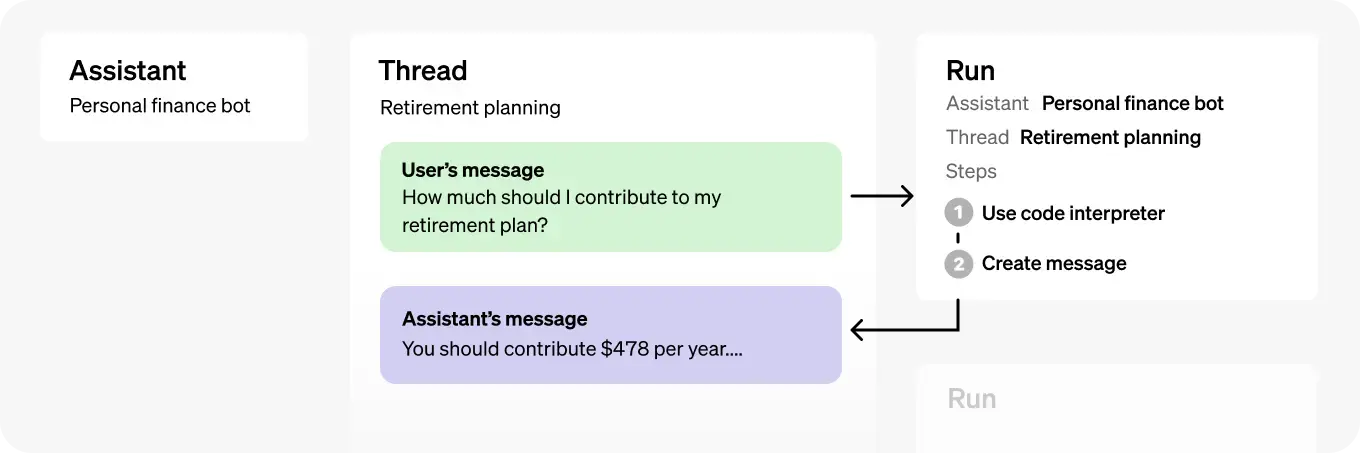

The core objects of the Assistants API are Assistant, Thread, Message, and Run. Each object and its role are described below:

Assistant

The Assistant object is an AI assistant built on top of OpenAI models that can call tools. You can customize the instructions of the Assistant to tailor its personality and functionality. For example, you can create an Assistant called “Data Analyst” that analyzes data and generates charts using the “code_interpreter” tool.

Thread

The Thread object represents the conversation session between the user and the Assistant. You can create a Thread for each user and add messages to it when the user interacts with the Assistant. The Thread stores the message history and truncates it when needed to fit within the model’s context length limit.

Message

The Message object can be created by the user or the Assistant. Messages may contain text, images, and other files. Messages are stored as a list on the Thread. In practice, developers add user messages to the Thread and trigger the Assistant’s response as needed.

Run

The Run object represents one invocation of an Assistant on a Thread. The Assistant uses its configuration and the Thread’s messages to perform tasks by calling models and tools. As part of the Run, the Assistant appends messages to the Thread.

2. Development Process of the Assistants API

2.1 Create your Assistant

To create an Assistant, you need to send a request to the API with instructions, model name, and tool configuration. Here’s a simple example of creating a personal math tutor assistant:

curl "https://api.openai.com/v1/assistants" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer YOUR_OPENAI_API_KEY" \

-H "OpenAI-Beta: assistants=v1" \

-d '{

"instructions": "You are a personal math tutor. Write and run code to answer math questions.",

"name": "Math Tutor",

"tools": [{"type": "code_interpreter"}],

"model": "gpt-4"

}'

API parameters:

- instructions - system instructions telling the assistant what to do.

- name - the name of the assistant.

- tools - defines which tools the assistant can use. Each assistant can have up to 128 tools. The supported tool types are code_interpreter, retrieval, and function.

- model - the ID of the model the assistant uses.

After successfully creating the Assistant, you will receive an Assistant ID.
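
For readers who call the API from code rather than from the shell, the same request can be sketched in Python. This is a minimal sketch that uses only the standard library; the helper name build_create_assistant_request and the placeholder key are assumptions made for illustration, not part of the API. The helper builds the request without sending it.

```python
import json
import urllib.request

API_BASE = "https://api.openai.com/v1"


def build_create_assistant_request(api_key, name, instructions, model, tools):
    """Build (but do not send) the POST /assistants request from the curl example."""
    if len(tools) > 128:
        raise ValueError("an assistant can have at most 128 tools")
    body = {
        "name": name,
        "instructions": instructions,
        "model": model,
        "tools": tools,
    }
    return urllib.request.Request(
        f"{API_BASE}/assistants",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
            "OpenAI-Beta": "assistants=v1",
        },
        method="POST",
    )


# Placeholder key: replace it with a real key before sending the request.
request = build_create_assistant_request(
    api_key="YOUR_OPENAI_API_KEY",
    name="Math Tutor",
    instructions="You are a personal math tutor. Write and run code to answer math questions.",
    model="gpt-4",
    tools=[{"type": "code_interpreter"}],
)
print(request.get_method(), request.full_url)
```

Passing the request to urllib.request.urlopen and decoding the JSON response should yield the Assistant object, whose ID you keep for the later steps.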

2.2 Create a Session Thread

A Thread represents a conversation, and we recommend creating a session Thread for each user when they start a conversation. You can create a Thread as follows:

curl https://api.openai.com/v1/threads \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-H "OpenAI-Beta: assistants=v1" \

-d ''

After creating the Thread, you will receive a Thread ID.

2.3 Add Messages to the Thread

You can add messages to a specific Thread. A message contains text and can optionally include files uploaded by the user. For example:

curl https://api.openai.com/v1/threads/{thread_id}/messages \

-H "Content-Type: application/json" \

-H "Authorization: Bearer YOUR_OPENAI_API_KEY" \

-H "OpenAI-Beta: assistants=v1" \

-d '{

"role": "user",

"content": "I need to solve this equation `3x + 11 = 14`. Can you help me?"

}'

API parameters:

- thread_id - represents the conversation thread ID, which you can obtain when creating the Thread.

The request body is a user message, usually the user’s question. Its structure is similar to the messages used by the Chat Completions API.

2.4 Run the Assistant to Generate a Response

To make the assistant respond to user messages, you need to create a Run. This allows the assistant to read the Thread and decide whether to use tools (if enabled) or simply use the model to best answer the query.

Note: Up to this point, the assistant has not responded to the user’s question. Only when you call the Run API will the AI assistant respond to the user’s question.

curl https://api.openai.com/v1/threads/{thread_id}/runs \

-H "Authorization: Bearer YOUR_OPENAI_API_KEY" \

-H "Content-Type: application/json" \

-H "OpenAI-Beta: assistants=v1" \

-d '{

"assistant_id": "asst_abc123",

"instructions": "Address the user as Jane Doe. The user has a premium account."

}'

API parameters:

- thread_id - represents the conversation thread ID, which you can obtain when creating the Thread.

- assistant_id - represents the assistant ID, which you can obtain when creating the Assistant.

- instructions - optional instructions that override, for this Run only, the instructions set when creating the Assistant.

A successful API request will return a Run ID.
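
In a typical turn, sections 2.3 and 2.4 go together: one request adds the user message, and the next starts the Run. The following Python sketch lays out the two request bodies side by side. The function name plan_turn and the (method, path, body) tuple format are illustrative assumptions, and nothing is sent over the network.

```python
def plan_turn(thread_id, assistant_id, user_text, run_instructions=None):
    """Return the two requests for one conversation turn as (method, path, body)."""
    message_body = {"role": "user", "content": user_text}
    run_body = {"assistant_id": assistant_id}
    if run_instructions is not None:
        # Optional field: replaces the Assistant's instructions for this Run.
        run_body["instructions"] = run_instructions
    return [
        ("POST", f"/v1/threads/{thread_id}/messages", message_body),
        ("POST", f"/v1/threads/{thread_id}/runs", run_body),
    ]


# Placeholder IDs in the style of the curl examples.
turn = plan_turn(
    "thread_abc123",
    "asst_abc123",
    "I need to solve this equation `3x + 11 = 14`. Can you help me?",
)
for method, path, body in turn:
    print(method, path, body)
```

Leaving run_instructions unset keeps the instructions that were defined on the Assistant.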

2.5 Check Assistant Running Status

A Run executes asynchronously after you create it, so you need to check its status periodically to determine whether it has completed. You can check the status of a Run with an HTTP request, shown here using curl.

CURL Request Example:

curl https://api.openai.com/v1/threads/thread_abc123/runs/run_abc123 \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-H "OpenAI-Beta: assistants=v1"

API Parameter Explanation:

https://api.openai.com/v1/threads/thread_abc123/runs/run_abc123: The request URL of the API, where thread_abc123 is the unique identifier of the Thread and run_abc123 is the unique identifier of the Run.

Response Body Example:

{

"id": "run_abc123",

"object": "thread.run",

"status": "completed",

"created_at": 1699073585,

...

}

API Response Parameter Explanation:

- id - the unique identifier of the Run.

- object - the type of the returned object, which is thread.run here.

- status - the status of the Run. Possible values include queued, in_progress, completed, requires_action, failed, etc.

- created_at - the timestamp of when the Run was created.
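
Because the Run is asynchronous, client code usually wraps the status check in a polling loop. The sketch below shows one way to do that in Python. The function name wait_for_run, the fetch_run callback, and the choice to treat only queued and in_progress as "still working" are assumptions for illustration; the fake fetcher stands in for the HTTP request so the example runs offline.

```python
import time

# Statuses that mean the Run is still working (assumption: any other status
# needs the caller's attention, including requires_action).
PENDING_STATUSES = {"queued", "in_progress"}


def wait_for_run(fetch_run, poll_interval=1.0, timeout=60.0, sleep=time.sleep):
    """Call fetch_run() until the Run leaves the pending statuses, then return it."""
    deadline = time.monotonic() + timeout
    while True:
        run = fetch_run()
        if run["status"] not in PENDING_STATUSES:
            return run
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run {run['id']} is still {run['status']}")
        sleep(poll_interval)


# Offline demonstration: a fake fetcher that replays three status checks.
fake_statuses = iter(["queued", "in_progress", "completed"])
final_run = wait_for_run(
    lambda: {"id": "run_abc123", "status": next(fake_statuses)},
    poll_interval=0,
)
print(final_run["status"])
```

In real use, fetch_run would perform the GET request shown above and return the decoded JSON body.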

2.6 Get Assistant Response Results

After the Run completes, you can read the Assistant’s response by listing the messages in the Thread. The curl request and its parameters are shown below.

Tip: When the Assistant finishes processing the user’s query, it appends its reply to the Thread as one or more messages. Therefore, you only need to query the latest messages in the Thread to get the Assistant’s response.

CURL Request Example:

curl https://api.openai.com/v1/threads/thread_abc123/messages \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-H "OpenAI-Beta: assistants=v1"

API Parameter Explanation:

- https://api.openai.com/v1/threads/thread_abc123/messages - the request URL of the API, where thread_abc123 is the unique identifier of the Thread.

- The request headers are the same as those used to check the Run status earlier, including the authentication information and the API version information.

Assistant Response Results Example:

In this example, the user asked the Assistant a math question, and the Assistant added a response Message to the Thread after processing it.

User: I need to solve the equation `3x + 11 = 14`. Can you help me?

Assistant: Of course, Jane Doe. To solve the equation `3x + 11 = 14`, you need to isolate `x` on one side of the equation. Let me calculate the value of `x` for you.

A: The solution to the equation `3x + 11 = 14` is `x = 1`.

After obtaining the Assistant’s response, you can present it to the user.
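
Extracting the reply from the message list can be sketched in Python as follows. The sketch rests on assumptions that are not spelled out above: the list response carries its messages in a data array ordered newest first, and each text part has the shape {"type": "text", "text": {"value": ...}}. The function name assistant_replies and the sample data are illustrative only.

```python
def assistant_replies(messages_response):
    """Return the text of the Assistant messages that follow the last user message."""
    replies = []
    for message in messages_response["data"]:  # assumed to be newest first
        if message["role"] == "user":
            break  # everything older belongs to earlier turns
        text_parts = [
            part["text"]["value"]
            for part in message["content"]
            if part["type"] == "text"
        ]
        replies.append("\n".join(text_parts))
    replies.reverse()  # restore chronological order
    return replies


# Hand-written sample that mirrors the conversation above (not real API output).
sample_response = {
    "object": "list",
    "data": [
        {"role": "assistant", "content": [
            {"type": "text", "text": {"value": "The solution to the equation `3x + 11 = 14` is `x = 1`."}}]},
        {"role": "assistant", "content": [
            {"type": "text", "text": {"value": "Of course, Jane Doe. Let me calculate the value of `x` for you."}}]},
        {"role": "user", "content": [
            {"type": "text", "text": {"value": "I need to solve the equation `3x + 11 = 14`. Can you help me?"}}]},
    ],
}

for reply in assistant_replies(sample_response):
    print("Assistant:", reply)
```

Stopping at the first user message keeps replies from earlier turns out of the result when the Thread holds a longer history.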

3. Tools: Built-in Tools Provided by OpenAI

3.1 Code Interpreter Tool

The Code Interpreter tool allows the Assistants API to write and run Python code in a sandboxed execution environment. It can process files in a variety of data formats and generate files containing data and images of graphs. The Code Interpreter lets your Assistant run code iteratively to solve challenging coding and math problems: when code written by the Assistant fails to run, the Assistant can revise it and try again until it runs successfully.

Enabling Code Interpreter

To enable the Code Interpreter, pass code_interpreter in the tools parameter when creating the Assistant object:

curl https://api.openai.com/v1/assistants \

-u :$OPENAI_API_KEY \

-H 'Content-Type: application/json' \

-H 'OpenAI-Beta: assistants=v1' \

-d '{

"instructions": "You are a personal math tutor. When asked a math question, write and run code to answer it.",

"tools": [

{ "type": "code_interpreter" }

],

"model": "gpt-4-turbo-preview"

}'

The model then decides at runtime when to invoke the Code Interpreter, based on the nature of the user’s request. You can encourage this behavior through the Assistant’s instructions (e.g., “Write code to solve this problem”).

Using Code Interpreter to Process Files

The Code Interpreter can parse data from files. This is useful when you want to provide a large amount of data to the Assistant or allow your users to upload their own files for analysis. Note that files uploaded for the Code Interpreter will not be indexed for retrieval. For detailed information on how to index files for retrieval, refer to the Retrieval Tool section below.

Files passed at the Assistant level can be accessed by all Runs associated with this Assistant:

curl https://api.openai.com/v1/files \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-F purpose="assistants" \

-F file="@/path/to/mydata.csv"

curl https://api.openai.com/v1/assistants \

-u :$OPENAI_API_KEY \

-H 'Content-Type: application/json' \

-H 'OpenAI-Beta: assistants=v1' \

-d '{

"instructions": "You are a personal math tutor. When asked a math question, write and run code to answer it.",

"tools": [{"type": "code_interpreter"}],

"model": "gpt-4-turbo-preview",

"file_ids": ["file_123abc456"]

}'

Reading Images and Files Generated by Code Interpreter

The Code Interpreter can also output files in the API, such as generating image charts, CSV, and PDF files. There are two types of files generated: images and data files (e.g., a CSV file with data generated by the Assistant).

When the Code Interpreter produces an image, you can find the image’s file ID in the file_id field of the Assistant Message response and use it to download the file:

{

"id": "msg_abc123",

"object": "thread.message",

"created_at": 1698964262,

"thread_id": "thread_abc123",

"role": "assistant",

"content": [

{

"type": "image_file",

"image_file": {

"file_id": "file-abc123"

}

}

]

// ...

}
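
Picking the image file IDs out of such a message can be sketched in Python as follows. The function names are illustrative, and the download path /v1/files/{file_id}/content is an assumption about the Files API that is not stated above; check it against the API reference before relying on it.

```python
def image_file_ids(message):
    """Return the file IDs of all image_file parts in a Message object."""
    return [
        part["image_file"]["file_id"]
        for part in message["content"]
        if part["type"] == "image_file"
    ]


def file_content_url(file_id):
    """URL assumed to serve the raw bytes of an uploaded or generated file."""
    return f"https://api.openai.com/v1/files/{file_id}/content"


# The Message object from the example above, reduced to the relevant fields.
message = {
    "id": "msg_abc123",
    "object": "thread.message",
    "role": "assistant",
    "content": [
        {"type": "image_file", "image_file": {"file_id": "file-abc123"}},
    ],
}

for file_id in image_file_ids(message):
    print(file_content_url(file_id))
```

A message can mix text and image parts, so the helper filters by type instead of reading only the first part.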

3.2 Retrieval Tool

The Retrieval tool augments the Assistant with knowledge from outside its model, such as proprietary product information or documents provided by your users. Once a file is uploaded and passed to the Assistant, OpenAI automatically chunks your documents, indexes and stores the embeddings, and uses vector search to retrieve relevant content to answer user queries.

Enable Retrieval

To enable retrieval, pass retrieval in the tools parameter of the Assistant:

curl https://api.openai.com/v1/assistants \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-H "OpenAI-Beta: assistants=v1" \

-d '{

"instructions": "You are a customer support chatbot. Use your knowledge base to respond to customer queries effectively.",

"tools": [{"type": "retrieval"}],

"model": "gpt-4-turbo-preview"

}'

Upload Files for Retrieval

As with the Code Interpreter, files can be passed at the Assistant level or at the individual Message level.

curl https://api.openai.com/v1/files \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-F purpose="assistants" \

-F file="@/path/to/knowledge.pdf"

curl "https://api.openai.com/v1/assistants" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-H "OpenAI-Beta: assistants=v1" \

-d '{

"instructions": "You are a customer support chatbot. Use your knowledge base to respond to customer queries effectively.",

"name": "Customer Support Chatbot",

"tools": [{"type": "retrieval"}],

"model": "gpt-4-turbo-preview",

"file_ids": ["file_123abc456"]

}'

3.3 Function Calling Tool

Similar to the Chat Completions API, the Assistants API supports function calling. Function calling lets you describe functions to the Assistant and have it intelligently return the functions that need to be called along with their arguments. When the Assistant invokes functions during a Run, the Assistants API pauses execution, and you supply the results of the function calls to continue the Run.

Define Functions

When creating an Assistant, you can define the set of functions it may call. Each function needs a unique name, a description, and a parameter specification.

The following code demonstrates how to define two functions using the curl command when creating an assistant:

curl https://api.openai.com/v1/assistants \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-H "OpenAI-Beta: assistants=v1" \

-d '{

"instructions": "You are a weather forecast bot. Use the provided functions to answer questions.",

"tools": [{

"type": "function",

"function": {

"name": "getCurrentWeather",

"description": "Get the weather conditions for a specific location",

"parameters": {

"type": "object",

"properties": {

"location": {"type": "string", "description": "City and state, e.g., San Francisco, CA"},

"unit": {"type": "string", "enum": ["c", "f"]}

},

"required": ["location"]

}

}

},

{

"type": "function",

"function": {

"name": "getNickname",

"description": "Get the nickname for a city",

"parameters": {

"type": "object",

"properties": {

"location": {"type": "string", "description": "City and state, e.g., San Francisco, CA"}

},

"required": ["location"]

}

}

}],

"model": "gpt-4-turbo-preview"

}'
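
Writing these definitions by hand is error-prone once there are several functions. The Python sketch below builds the same tools array and checks the unique-name requirement mentioned above. The helper names function_tool and check_unique_names are hypothetical and not part of any SDK.

```python
def function_tool(name, description, properties, required=()):
    """Build one function entry for the tools array."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": list(required),
            },
        },
    }


def check_unique_names(tools):
    """Raise ValueError if two function tools share a name; otherwise return tools."""
    names = [tool["function"]["name"] for tool in tools if tool["type"] == "function"]
    duplicates = sorted({name for name in names if names.count(name) > 1})
    if duplicates:
        raise ValueError(f"duplicate function names: {duplicates}")
    return tools


location = {"type": "string", "description": "City and state, e.g., San Francisco, CA"}
tools = check_unique_names([
    function_tool(
        "getCurrentWeather",
        "Get the weather conditions for a specific location",
        {"location": location, "unit": {"type": "string", "enum": ["c", "f"]}},
        required=["location"],
    ),
    function_tool(
        "getNickname",
        "Get the nickname for a city",
        {"location": location},
        required=["location"],
    ),
])
print([tool["function"]["name"] for tool in tools])
```

The resulting list can be serialized with json.dumps and used as the tools value in the request body.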

Reading the Functions Called by the Assistant

When a user message triggers a function call, the Run enters the requires_action status. At this point, you can retrieve the Run object to obtain the details of the function calls.

Here is an example of a retrieved Run object that contains the function call information:

{

"id": "run_abc123",

"object": "thread.run",

"status": "requires_action",

"required_action": {

"type": "submit_tool_outputs",

"submit_tool_outputs": {

"tool_calls": [

{

"id": "call_abc123",

"type": "function",

"function": {

"name": "getCurrentWeather",

"arguments": "{\"location\":\"San Francisco\"}"

}

},

{

"id": "call_abc456",

"type": "function",

"function": {

"name": "getNickname",

"arguments": "{\"location\":\"Los Angeles\"}"

}

}

]

}

},

...

}

The tool_calls field lists the function calls. For each call, name identifies the function and arguments holds its arguments as a JSON-encoded string. You then call the corresponding function in your own program.
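
Reading the pending calls out of a Run object can be sketched in Python as follows. The function name parse_tool_calls is illustrative; the field names are the ones shown in the Run object above. Note that arguments must be decoded with json.loads because it arrives as a string.

```python
import json


def parse_tool_calls(run):
    """Return (call_id, function_name, arguments) for each pending function call."""
    if run.get("status") != "requires_action":
        return []
    tool_calls = run["required_action"]["submit_tool_outputs"]["tool_calls"]
    return [
        (call["id"], call["function"]["name"], json.loads(call["function"]["arguments"]))
        for call in tool_calls
        if call["type"] == "function"
    ]


# The Run object from the example above, reduced to the relevant fields.
run = {
    "id": "run_abc123",
    "status": "requires_action",
    "required_action": {
        "type": "submit_tool_outputs",
        "submit_tool_outputs": {
            "tool_calls": [
                {"id": "call_abc123", "type": "function",
                 "function": {"name": "getCurrentWeather",
                              "arguments": "{\"location\":\"San Francisco\"}"}},
                {"id": "call_abc456", "type": "function",
                 "function": {"name": "getNickname",
                              "arguments": "{\"location\":\"Los Angeles\"}"}},
            ]
        },
    },
}

for call_id, name, arguments in parse_tool_calls(run):
    print(call_id, name, arguments)
```

Keeping the call ID next to the decoded arguments matters because each result has to be reported back under that ID.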

Submitting Function Outputs

After executing the functions locally and obtaining the results, you need to submit these results to the Assistants API so that the Assistant can continue processing the user’s request. Each output must be associated with its original function call.

Here is an example of how to submit function outputs:

curl https://api.openai.com/v1/threads/thread_abc123/runs/run_123/submit_tool_outputs \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $OPENAI_API_KEY" \

-H "OpenAI-Beta: assistants=v1" \

-d '{

"tool_outputs": [

{

"tool_call_id": "call_abc123",

"output": "{\"temperature\": \"22\", \"unit\": \"celsius\"}"

},

{

"tool_call_id": "call_abc456",

"output": "{\"nickname\": \"LA\"}"

}

]

}'

Parameter explanation:

- thread_abc123 represents the conversation thread ID

- run_123 represents the ID of the Run object

- tool_call_id represents the ID of a specific function call, which is obtained from the previous tool_calls parameter.

Upon successfully submitting all function outputs, the status of the Run object will be updated again, and the assistant will continue processing and return the final response to the user.
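
The request body for this step can be assembled from the pending calls and your local results. The Python sketch below assumes that every pending call has to be answered in the same request, so it refuses to build a partial body. The function name build_tool_outputs and the results dictionary keyed by call ID are illustrative choices.

```python
import json


def build_tool_outputs(tool_calls, results):
    """Pair each pending tool call with its result and build the request body.

    tool_calls: the tool_calls list taken from the Run's required_action.
    results: dict mapping tool_call_id to a JSON-serializable result.
    """
    missing = [call["id"] for call in tool_calls if call["id"] not in results]
    if missing:
        raise ValueError(f"no output for tool calls: {missing}")
    return {
        "tool_outputs": [
            {"tool_call_id": call["id"], "output": json.dumps(results[call["id"]])}
            for call in tool_calls
        ]
    }


# Pending calls and hand-written results matching the curl example above.
pending_calls = [{"id": "call_abc123"}, {"id": "call_abc456"}]
local_results = {
    "call_abc123": {"temperature": "22", "unit": "celsius"},
    "call_abc456": {"nickname": "LA"},
}
body = build_tool_outputs(pending_calls, local_results)
print(json.dumps(body, indent=2))
```

Each output is serialized to a string with json.dumps, matching the string-valued output fields in the curl example.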